React SEO: Best Practices To Make It SEO-friendly

Search Engine Optimization (SEO) has become essential to any online presence. It affects not only how well a product or service performs in the marketplace but also, directly or indirectly, a business owner’s revenue and efficiency. React websites face unique SEO challenges because they typically render their content in the browser with JavaScript.

With mobile users accounting for nearly 59% of all global website traffic, businesses recognize the importance of digital marketing strategies that expand their reach and draw in a larger audience than originally anticipated. Single Page Applications (SPAs) help meet this need with fast, app-like experiences, allowing companies to boost their reach and gain access to untapped markets.

Understanding Googlebot Webpage Crawling

To optimize the SEO of a React web app, it is essential to understand how Google’s bots work and the common difficulties React solutions encounter. Google uses bots to crawl websites, scanning your website’s pages and discovering new ones so they can be indexed and ranked. You can avoid unnecessary bot requests by using the robots.txt file to tell crawlers which pages they should and should not crawl.
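A robots.txt file is plain text served at the site root. As a minimal sketch, assuming you generate it from a Node build script, the TypeScript below assembles one from a list of rules. The `RobotsRule` type, the `buildRobotsTxt` helper, the `/admin/` and `/cart/` paths, and the example.com sitemap URL are hypothetical placeholders. Keep in mind that robots.txt controls crawling, not indexing: it steers bots away from pages but does not hide them from search results.

```typescript
// Hypothetical rule shape for illustration; not part of any library.
interface RobotsRule {
  userAgent: string;   // "*" for all crawlers, or a specific bot such as "Googlebot"
  allow?: string[];    // path prefixes the crawler may fetch
  disallow?: string[]; // path prefixes the crawler should not fetch
}

// Build the text of a robots.txt file from a list of rule groups.
function buildRobotsTxt(rules: RobotsRule[], sitemapUrl?: string): string {
  const lines: string[] = [];
  for (const rule of rules) {
    lines.push(`User-agent: ${rule.userAgent}`);
    for (const path of rule.allow ?? []) lines.push(`Allow: ${path}`);
    for (const path of rule.disallow ?? []) lines.push(`Disallow: ${path}`);
    lines.push(""); // a blank line separates rule groups
  }
  // The Sitemap directive tells crawlers where the sitemap lives.
  if (sitemapUrl) lines.push(`Sitemap: ${sitemapUrl}`);
  return lines.join("\n");
}

const robotsTxt = buildRobotsTxt(
  [{ userAgent: "*", allow: ["/"], disallow: ["/admin/", "/cart/"] }],
  "https://example.com/sitemap.xml",
);
console.log(robotsTxt);
```

The resulting text would be served at https://example.com/robots.txt.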

React applications are usually single-page applications, which can make it hard for bots to crawl their pages. Technologies like React Helmet and server-side rendering can be used to ensure that bots can access and index the pages effectively. It is also important to structure the React web app so that bots can access the content easily, by organizing the content logically and using the appropriate HTML tags.

Googlebot crawls webpages by following the links on a page and downloading the pages it finds, a process known as web crawling. It then processes the content of each page and stores it in an index, which the search engine uses to return relevant results when a search query is made. Googlebot first reads the HTML it downloads; content that only appears after JavaScript runs must wait for a separate rendering step, which can be delayed and may miss content if rendering fails. This can make it difficult for React websites to be indexed correctly.

Why is SEO Compatibility Important?

Search engine rankings are vital today. Studies show that 95% of web users visit websites displayed on the first page of Google’s results. If you want your app to be noticed by the people who will use it, you must optimize it to be search engine friendly.

A recent study revealed that the top 5 search engine results page (SERP) listings account for over 65% of all web traffic. This demonstrates the significance of achieving a high ranking on Google.

Major SEO Challenges for React SPA Pages

When a crawler first visits a React single-page application, it is greeted with a blank page. The application’s elements appear on the screen only as the JavaScript is downloaded and executed.

In addition, several other issues must be addressed for a React single-page application to succeed in search. Let us discuss some of them:

1. Site mapping

A sitemap is a document outlining the structure of a website, including its pages, videos, and other sections. It helps Google crawl the site easily and understand its contents. Unfortunately, React does not have a built-in way to generate a sitemap. If you use React Router to manage routes, you must find an external tool to generate a sitemap, which may require some effort.
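Since React has no built-in sitemap generator, one option is a small build script. The sketch below assumes you can list your public routes by hand or export them from your router configuration, and turns that list into the XML format defined by the sitemaps.org protocol. `SitemapEntry`, `buildSitemap`, and the example.com URLs are hypothetical; dynamic routes such as product pages would need their real paths filled in from your data.

```typescript
// Hypothetical entry type; in a real project the paths might come from your router config.
interface SitemapEntry {
  path: string;          // route path, e.g. "/about"
  lastModified?: string; // optional W3C date, e.g. "2024-01-15"
}

// Escape the characters XML does not allow unescaped inside text content.
function escapeXml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Build a sitemap.xml document (sitemaps.org protocol) from a list of routes.
function buildSitemap(baseUrl: string, entries: SitemapEntry[]): string {
  const origin = baseUrl.replace(/\/+$/, ""); // drop trailing slashes so paths join cleanly
  const urlLines = entries.map((entry) => {
    const loc = `<loc>${escapeXml(origin + entry.path)}</loc>`;
    const lastmod = entry.lastModified ? `<lastmod>${entry.lastModified}</lastmod>` : "";
    return `  <url>${loc}${lastmod}</url>`;
  });
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...urlLines,
    "</urlset>",
  ].join("\n");
}

const sitemapXml = buildSitemap("https://example.com/", [
  { path: "/" },
  { path: "/about", lastModified: "2024-01-15" },
]);
console.log(sitemapXml);
```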

2. Empty First-Pass Content

React apps rely heavily on JavaScript, which can make them difficult for search engines to crawl and index. This is because React uses an “app shell” model, where the initial HTML page contains no meaningful content and JavaScript must be executed to display the page’s content.

This means that when Googlebot first visits the page, it sees an empty page and only gets the content after the page is rendered. This can significantly delay indexing on sites with thousands of pages.
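To make the difference concrete, the TypeScript sketch below compares a typical client-rendered app shell with the same page after server-side rendering or prerendering. The hypothetical `visibleTextWithoutJs` helper strips scripts and tags with regular expressions to approximate what is readable in the raw HTML before any JavaScript runs. It is a rough illustration, not a real HTML parser and not a model of how Googlebot works internally.

```typescript
// Approximate the text readable in raw HTML before any JavaScript runs.
// Naive regex-based sketch for illustration only.
function visibleTextWithoutJs(html: string): string {
  return html
    .replace(/<script[\s\S]*?<\/script>/gi, " ") // scripts are not executed here
    .replace(/<style[\s\S]*?<\/style>/gi, " ")
    .replace(/<[^>]+>/g, " ")                    // drop the remaining tags
    .replace(/\s+/g, " ")
    .trim();
}

// Client-side rendering: the body is an empty mount point plus a JavaScript bundle.
const appShell = `<!doctype html><html><head><title>Shop</title></head>
<body><div id="root"></div><script src="/static/js/main.js"></script></body></html>`;

// The same page after server-side rendering or prerendering: content is in the HTML itself.
const rendered = `<!doctype html><html><head><title>Shop</title></head>
<body><div id="root"><h1>Running Shoes</h1><p>Lightweight shoes for daily training.</p></div>
<script src="/static/js/main.js"></script></body></html>`;

console.log(visibleTextWithoutJs(appShell)); // "Shop"
console.log(visibleTextWithoutJs(rendered)); // "Shop Running Shoes Lightweight shoes for daily training."
```

Only the title survives in the app shell; the product content exists only in the rendered version.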

3. Metadata of the Webpage

Meta tags help Google and social media sites show the correct thumbnail, title, and description for a given page. These sites read this data from the <head> section of the page’s HTML.

In a client-rendered React app, the server sends the same app shell for every URL, and React renders all the content, including the meta tags, in the browser. This makes it difficult for individual pages to have their own metadata, because the app shell stays the same across the entire website or app.
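One way to give each route its own metadata is to generate its head tags per page. The sketch below assumes a hypothetical `PageMeta` record for each route and builds the title, description, canonical link, and Open Graph tags as an HTML string; `PageMeta`, `renderHeadTags`, and the example values are illustrative. In a React app, libraries such as React Helmet manage these tags from components, but crawlers and social media scrapers only see them reliably when they are present in the HTML the server sends, for example through server-side rendering or prerendering.

```typescript
// Hypothetical per-page metadata; the field names are illustrative.
interface PageMeta {
  title: string;
  description: string;
  canonicalUrl: string;
  imageUrl?: string; // thumbnail used by social media previews
}

// Escape text before placing it inside HTML elements or attribute values.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the <head> tags for a single page.
function renderHeadTags(meta: PageMeta): string {
  const title = escapeHtml(meta.title);
  const description = escapeHtml(meta.description);
  const url = escapeHtml(meta.canonicalUrl);
  const tags = [
    `<title>${title}</title>`,
    `<meta name="description" content="${description}">`,
    `<link rel="canonical" href="${url}">`,
    // Open Graph tags are read by social media sites for link previews.
    `<meta property="og:title" content="${title}">`,
    `<meta property="og:description" content="${description}">`,
    `<meta property="og:url" content="${url}">`,
  ];
  if (meta.imageUrl) {
    tags.push(`<meta property="og:image" content="${escapeHtml(meta.imageUrl)}">`);
  }
  return tags.join("\n");
}

const headHtml = renderHeadTags({
  title: "Running Shoes | Example Shop",
  description: "Lightweight shoes for daily training.",
  canonicalUrl: "https://example.com/running-shoes",
});
console.log(headHtml);
```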

4. Loading Time and User Experience

JavaScript tasks can be very time-consuming, from fetching to parsing to executing. It is even more challenging when network calls must be made to gather the required data, resulting in longer loading times for users.

Google takes loading time into account when ranking pages, because long loading times hurt the user experience and can result in a lower ranking.

Methods to Make React SEO-friendly

React websites can be optimized for SEO during development so that they are search engine friendly. Incorporating SEO best practices into the design and coding of React websites will help boost their rankings and draw in more organic traffic. Let us discuss some techniques for developing React apps with an SEO-friendly approach.

1. Prerendering Technique

Prerendering is a good way to make a React single-page application crawlable by search engines. It involves generating the HTML and CSS for each page ahead of time and storing the result in a cache.

When a request arrives, the server checks whether it comes from a user or from a bot such as Googlebot, usually by inspecting the User-Agent header. If it is a user, the browser loads the app as usual. If it is a bot, the server returns the cached HTML instead, which significantly reduces loading time and eliminates the possibility of the bot seeing a blank page.
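The request flow described above can be sketched as a plain TypeScript function, independent of any web framework. The bot patterns, the `prerenderCache` map, and the `isCrawler` and `handleRequest` helpers are hypothetical, and real User-Agent detection needs a fuller, maintained list. Google describes this user-agent-based setup as dynamic rendering and treats it as a workaround rather than a long-term solution; the cached HTML must also show bots the same content that users see.

```typescript
// Hypothetical, deliberately short list of crawler User-Agent patterns.
const BOT_PATTERNS: RegExp[] = [/googlebot/i, /bingbot/i, /duckduckbot/i, /facebookexternalhit/i, /twitterbot/i];

interface PageResponse {
  status: number;
  body: string;
}

// Client-rendered shell that regular browsers receive.
const APP_SHELL =
  '<!doctype html><html><body><div id="root"></div><script src="/main.js"></script></body></html>';

// Prerendered HTML snapshots keyed by path (filled in by a prerendering step).
const prerenderCache = new Map<string, string>();

// Pass an empty string when the User-Agent header is missing.
function isCrawler(userAgent: string): boolean {
  return userAgent.length > 0 && BOT_PATTERNS.some((pattern) => pattern.test(userAgent));
}

function handleRequest(path: string, userAgent: string): PageResponse {
  if (isCrawler(userAgent)) {
    const snapshot = prerenderCache.get(path);
    if (snapshot !== undefined) {
      return { status: 200, body: snapshot }; // bots get the cached, fully rendered HTML
    }
  }
  // Users, and bots requesting pages without a snapshot, get the normal app shell.
  return { status: 200, body: APP_SHELL };
}

prerenderCache.set("/pricing", "<!doctype html><html><body><h1>Pricing</h1></body></html>");

const googlebotUa = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)";
console.log(handleRequest("/pricing", googlebotUa).body);
```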

Pros:

- Simple implementation

- Compatible with modern web features

- Requires fewer changes to the codebase

- Executes the page’s JavaScript and converts the result into static HTML

Cons:

- Prerendering services are generally not free

- Takes longer for pages with large amounts of data

- You need to generate a new version of the page each time you change its content.

2. Isomorphic React Practices

Isomorphic (also called universal) JavaScript runs the same code on both the server and the client. The server renders each page to HTML, so users and crawlers receive high-quality content even when JavaScript is disabled or has not run yet, and the client-side code then takes over in the browser.

Once the page is loaded, all the necessary content and features are immediately available. With JavaScript enabled, the website then behaves like a dynamic application whose components load quickly. This results in a smoother user experience than a regular website and a more enjoyable single-page application experience.
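The core idea of isomorphic rendering can be shown without any framework: one rendering function shared by the server and the browser, plus the data the server used, embedded in the page so the client can continue from the same state. In React, the corresponding pieces are `renderToString` from `react-dom/server` on the server and `hydrateRoot` from `react-dom/client` in the browser. The sketch below uses plain TypeScript string templates instead, and `Product`, `renderProduct`, `renderDocument`, and the `window.__INITIAL_STATE__` convention are hypothetical names for illustration.

```typescript
interface Product {
  name: string;
  price: number;
}

function escapeHtml(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;").replace(/"/g, "&quot;");
}

// Shared view: the same function would be bundled for both the server and the browser.
function renderProduct(product: Product): string {
  return `<h1>${escapeHtml(product.name)}</h1><p>$${product.price.toFixed(2)}</p>`;
}

// Server side: send fully rendered HTML plus the data used to render it,
// so the client-side code can take over without refetching.
function renderDocument(product: Product): string {
  // Escape "<" so the JSON cannot close the <script> tag early.
  const state = JSON.stringify(product).replace(/</g, "\\u003c");
  return [
    "<!doctype html><html><body>",
    `<div id="root">${renderProduct(product)}</div>`,
    `<script>window.__INITIAL_STATE__ = ${state};</script>`,
    '<script src="/main.js"></script>',
    "</body></html>",
  ].join("");
}

const html = renderDocument({ name: "Trail Shoe", price: 89.5 });
console.log(html);
```

In the browser, the bundled code would read `window.__INITIAL_STATE__`, call the same rendering logic (in React, hydrate the same component tree), and attach event handlers to the markup already on the page. The same server half is also the basic shape of server-side rendering.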

3. Server-side Rendering

To develop a React web application, you must understand the difference between client-side and server-side rendering. With client-side rendering, the browser receives an HTML file with little or no content. With server-side rendering, the server responds to each request with a fully rendered HTML page.

In client-side rendering, JavaScript code downloads the content from the server and displays it on the user’s screen. This can create difficulties for SEO, because Google’s crawlers may not see the content at all, or may see only part of it, so the page is not indexed correctly.

In contrast, server-side rendering gives browsers and Googlebot HTML files that already contain the complete content. This makes it easier for Googlebot to index the pages, which can help them achieve higher rankings.

Pros:

- Optimizes pages for social media sharing

- Top-notch SEO compatibility

- Provides immediate page availability

- Improves UI features and functionality

Cons:

- Slower page transitions

- Complicated caching

- More costly than other methods

Tricks to Maintain the SEO Compatibility of React Webpages

When building a SPA, developers can use one of the popular JavaScript frameworks such as React, Angular, or Vue. According to a recent survey, React is the most popular of these. Here are some tips for developing SEO-friendly React websites:

- Using Lowercase URLs

Google bots can be tripped up by pages whose URLs differ only in letter case, such as /Invision and /invision, because they treat them as separate pages. To avoid this issue, it’s best practice to use lowercase letters when creating URLs.
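A common way to enforce lowercase URLs is a server-side redirect: if a request arrives with uppercase letters in the path, the server answers with a 301 redirect to the lowercase version, so only one version of each page gets crawled. The `lowercaseRedirectTarget` function below is a hypothetical, framework-independent sketch. It lowercases only the path and leaves the query string alone, because query values may be case-sensitive.

```typescript
// Return the URL to 301-redirect to, or null if the path is already lowercase.
function lowercaseRedirectTarget(requestUrl: string): string | null {
  const queryStart = requestUrl.indexOf("?");
  const path = queryStart === -1 ? requestUrl : requestUrl.slice(0, queryStart);
  const query = queryStart === -1 ? "" : requestUrl.slice(queryStart);
  const lowerPath = path.toLowerCase();
  return lowerPath === path ? null : lowerPath + query;
}

console.log(lowercaseRedirectTarget("/Invision?ref=Home")); // "/invision?ref=Home"
console.log(lowercaseRedirectTarget("/invision")); // null
```

In a server, a non-null result would be sent back with status 301 and a Location header set to the returned value.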

- Use <a href> for Links

A common mistake when developing SPAs is to use a <div> or a <button> to change the URL. Although this is not a fault of React itself, it can cause problems for search engines like Google. Google bots process URLs and look for additional URLs to crawl in <a href> elements. If the <a href> element is missing, Google bots won’t crawl those URLs or pass PageRank through them. To ensure Google bots can find and crawl your URLs, use <a href> elements for navigation.
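The sketch below imitates, in a very simplified way, how a crawler collects links: it reads `href` attributes on `<a>` elements and never runs click handlers. `extractCrawlableLinks` and the `navigate` calls in the sample markup are hypothetical, and the regex approach is an illustration, not a real HTML parser. In React Router, the `<Link>` component renders a real `<a href>` element, so links built with it remain discoverable.

```typescript
// Collect href values from <a> elements; click handlers are never executed.
function extractCrawlableLinks(html: string): string[] {
  const links: string[] = [];
  const anchorHref = /<a\s[^>]*href\s*=\s*"([^"]*)"/gi;
  let match: RegExpExecArray | null;
  while ((match = anchorHref.exec(html)) !== null) {
    links.push(match[1]);
  }
  return links;
}

// Only the first item is a real link; the other two navigate via JavaScript.
const nav = `
<nav>
  <a href="/pricing">Pricing</a>
  <div onclick="navigate('/blog')">Blog</div>
  <button onclick="navigate('/contact')">Contact</button>
</nav>`;

console.log(extractCrawlableLinks(nav)); // returns ["/pricing"]
```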

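- Handling 404 Errors Correctly

If a page has an error or does not exist, the server should return a 404 status code so crawlers know it is missing. In a single-page application, however, the server often returns the same app shell with a 200 status for every URL. To fix this, update your server and routing files (for example, server.js and route.js) as soon as possible so that missing pages return a proper 404 and broken pages are repaired. Doing this can drive more traffic to your website or web app.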
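One way to stop serving every URL with a 200 status is to let the server check requested paths against the same route list the React app uses. The sketch below is a hypothetical, simplified matcher (`KNOWN_ROUTES`, `matchesRoute`, and `statusForPath` are illustrative names) that supports static segments and `:param` placeholders. For dynamic routes such as a blog post, a real server would also need to confirm that the post exists before answering 200. The body can still be the app shell or a rendered "not found" page; what matters to crawlers is the 404 status.

```typescript
// Hypothetical route table mirroring the app's client-side routes; ":slug" marks a dynamic segment.
const KNOWN_ROUTES: string[] = ["/", "/pricing", "/blog", "/blog/:slug"];

// True if every segment matches literally or is a ":param" placeholder.
function matchesRoute(pattern: string, path: string): boolean {
  const patternParts = pattern.split("/").filter((part) => part.length > 0);
  const pathParts = path.split("/").filter((part) => part.length > 0);
  if (patternParts.length !== pathParts.length) return false;
  return patternParts.every((part, i) => part.startsWith(":") || part === pathParts[i]);
}

// HTTP status the server should send for a requested path (query string already removed).
function statusForPath(path: string): 200 | 404 {
  return KNOWN_ROUTES.some((route) => matchesRoute(route, path)) ? 200 : 404;
}

console.log(statusForPath("/blog/react-seo")); // 200
console.log(statusForPath("/blgo")); // 404
```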

Final Words

Single-page React applications provide excellent performance, smooth interactions similar to native applications, a lighter server load, and easy web development. SEO issues should not stop you from using the React library, as there are proven strategies to address them. Additionally, search engine crawlers become more sophisticated every year, so SEO may eventually become a non-issue when using React.

5 Reasons Keyword Research Is Important to Every SEO Campaign

You may have read it a million times, or you may just be reading it now, but keyword research is important. In fact, it’s considered one of the most crucial stages of a successful SEO campaign. Search engine optimization is based on optimizing pages for keyword searches. If you misinterpret what a user is looking for when they type in a search, it can completely throw off your entire campaign.

In order to understand what your SEO company does for you, it helps to understand why the research stage of an SEO campaign is vital to generating a return on your investment. Here are 5 reasons SEO companies put so much effort into keyword research.

Understanding search intent

Search engines are evolving faster than ever before. The use of artificial intelligence and machine learning has allowed search engines to pinpoint search intent with great precision. This advancement has also given search engines the ability to determine whether a given web page can satisfy the intent of a search.

According to Market.us, the Artificial Intelligence Market was valued at USD 129.28 billion in 2022. It is projected to grow at a compound annual growth rate (CAGR) of 36.8% from 2023 to 2032, reaching a value of USD 2745 billion.

Think about it. Google now has statistics on billions of searches, showing what users searched for, what they clicked on, and how much time they spent on the pages they clicked. This information is extremely valuable for forming the most accurate idea of what “the best answer” to a search query is.

To understand search intent is to understand what a user is truly looking for when they type in a specific keyword search. If you know what they want and need to see to completely satisfy that search, you can provide content that addresses their needs at all levels.

Keyword research is the process of identifying the topics, the keywords, and the level of credibility required to be the best answer to a search query.

Benchmark the strength of your competition

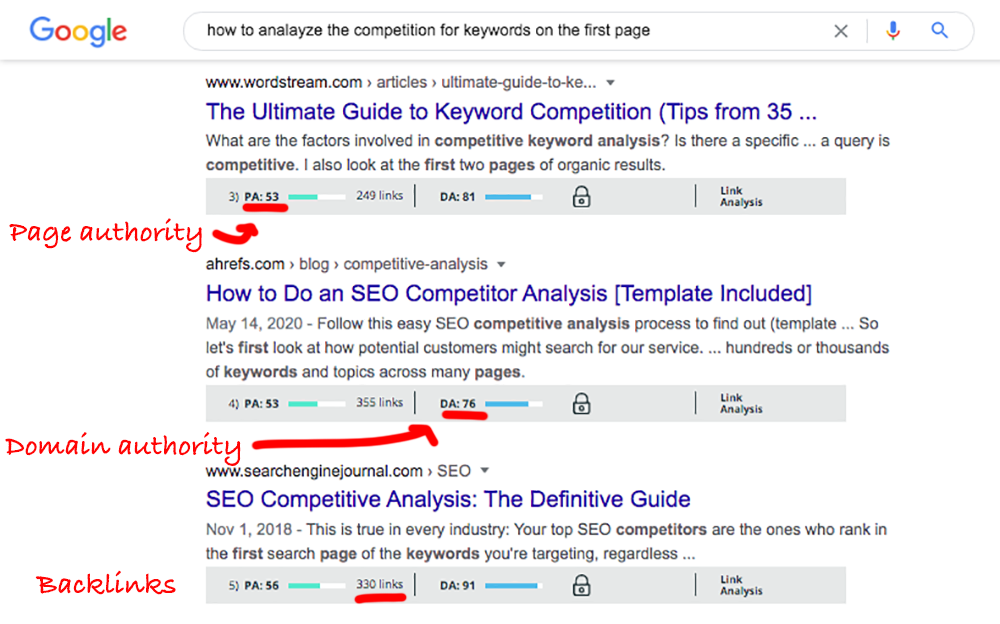

A large part of determining the true intent of a search query is analyzing the pages that are already ranking for that search term. You can extract similarities from the top-ranking pages and pinpoint characteristics that are contributing to being displayed in the top-ranking positions.

For example, if the keyword you’re trying to rank for returns 10 web pages that are massive white-paper-style essays, it’s safe to say that you’ll need extremely long-form content to rank on the first page.

If the first page of search results were all list-style content, it would make sense to write a page in the same format.

Competitive analysis goes beyond the type of content; it also means comparing backlink profiles. To determine the strength of the competition, you need to consider the domain authority of each site and the backlinks pointing to each page.

Keep in mind that domain authority and the number of backlinks have become less decisive in achieving rank. We are seeing more and more websites being given the chance to appear on the first page of results IF the content is outstanding and optimized to answer the true intent of a search.

If users respond to and engage with the content on a lower-domain-authority website, that website may hold its ranking long enough to acquire the backlinks needed to maintain its position. If the content is not engaging, the website is pushed off the first page of results so that other websites get a chance at being “the best answer to search intent.” This process can be attributed to one of the many functions of RankBrain, Google’s artificial intelligence system that plays a large role in first-page rankings.

Nevertheless, the backlinks and domain authority of the top-ranking pages are still major considerations on how to prioritize the keywords in your strategy. There are some keywords that will take longer than others to achieve a first-page appearance. The backlink profile and domain authority of your competition give you insight into how strong your competition actually is for that specific keyword.

Develop keyword options

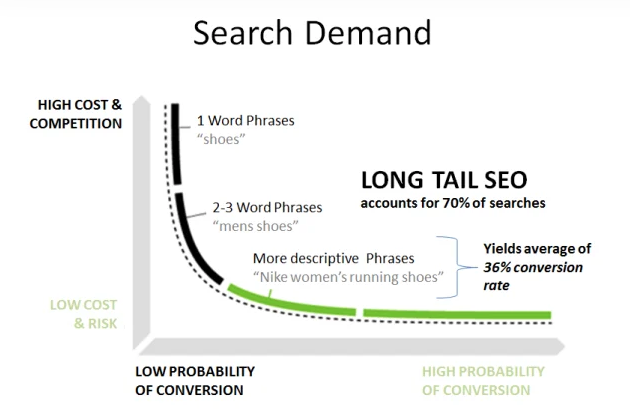

Google reports that close to 17% of all searches have never been seen before. This means that the way people type their searches is constantly changing, and these new searches are, for the most part, long-tail keywords.

Long-tail keywords are typically three or four words strung together in a search. Since the advent of voice search, long-tail keywords have been getting longer as searches become more conversational.

In order to come up with the best keyword strategy, you need options.

Although Google ranks according to keyword topic, optimizing for specific target keywords will help your website rank in a top position. Keyword research includes finding the most commonly searched terms as well as a list of the options that serve the same intent. Balancing the strength of competition, the volume of traffic and your current website status is part of the process of establishing a keyword strategy.

Establish a keyword strategy

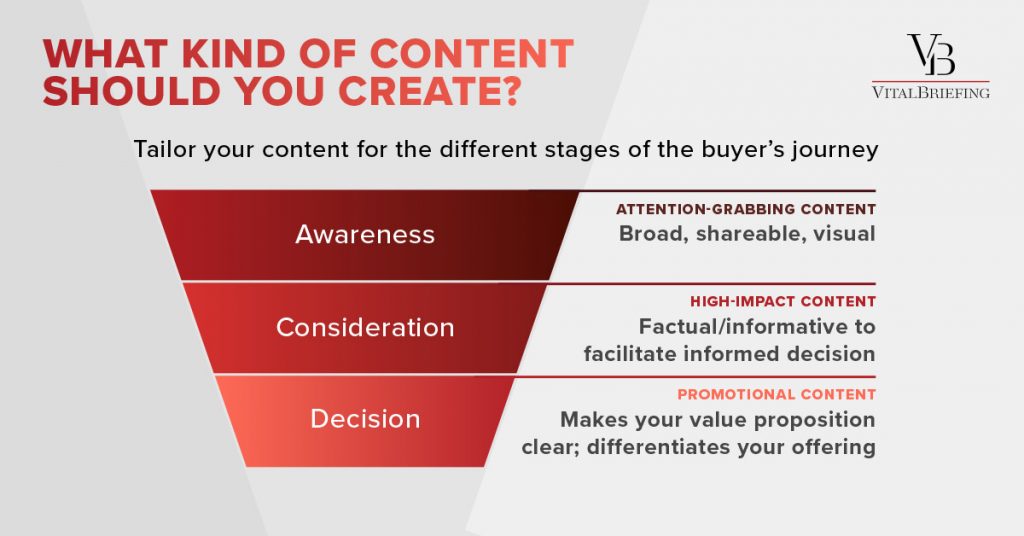

The goal of search engine optimization is to create content that ranks and sends visitors to your site at each stage of the buyer journey. As a lead-generating asset, your website should target keywords that drive traffic at the awareness stage, the consideration stage as well as the decision stage.

Keyword research leads to the discovery of keywords your site currently ranks for which present traffic opportunities. Keywords in “striking distance” are considered to be those in positions 11-20 (which is on the second page of search results). There is much less work to be done on these pages than starting from scratch, so they become short-term goals in your keyword strategy.

Moving a page from the second to the first page of results can be done by improving the content, improving the optimization, and building internal and/or external links. Opportunities like this are typically discovered during keyword research.

Keywords that require new content or a new topic cluster fall under the long-term strategy. Since the content needs to be published, optimized, and linked to, it takes a long time to move it to the first page of results.

Ideally, your SEO campaign will include both short-term and long-term goals for driving traffic.

Develop content that drives traffic

The content strategy you implement will closely follow your keyword strategy. You can enhance the content your site publishes by focusing on what users want to know and what they need to know.

A large part of optimizing for ideal keywords is answering commonly asked questions. The process involves breaking down major keyword topics into more focused subtopics that form topic clusters.

A topic cluster consists of pillared content and clustered content. Pillared content covers a broad range of your major keywords. These pages create the “pillars” of your website and are most often service pages, product categories or major themes that can be broken down into many different subtopics.

Clustered content is the articles and posts that solve problems, provide education and are narrowly focused on answering a very specific aspect of search intent. Here is an example of a topic cluster on cakes:

By implementing this type of content structure, your site develops depth and demonstrates expertise in your niche and industry. Keep in mind that Google loves websites that can satisfy search intent. If your site has dozens of detailed articles that answer commonly asked questions, it becomes a strong candidate for keyword-related searches.

As you build valuable content assets within a keyword topic, your site builds credibility and authority and most importantly the ability to satisfy search intent.

Don’t underestimate keyword research

It should be much clearer why keyword research is considered a major foundational practice in developing an SEO campaign. It helps to understand your audience as well as understand search engines in order to create content that performs well in the search results.

Don’t underestimate the power of keyword research. When done correctly, it results in the discovery of the best solutions for driving the most traffic with the least amount of effort.